http://edge.org/annual-

What Do You Think About Machines That Think?


The challenge of thinking machines | Science | EL MUNDO

http://www.elmundo.es/ciencia/

The robot HAL in Kubrick's '2001: A Space Odyssey', an icon of artificial intelligence.

"What do you think about machines that think?" This is the question that the online magazine Edge has put, as it does every year around this time, to some of the most brilliant minds on the planet. Just over a month ago, in early December, Stephen Hawking warned of the potentially apocalyptic consequences of artificial intelligence, which in his view could bring about "the end of the human species". But should we really fear the danger of a future army of out-of-control humanoids? Or should we instead celebrate the extraordinary opportunities that the development of thinking, and even sentient, machines could offer us? Such beings would also confront us with new ethical dilemmas. Would they be part of our "society"? Should we grant them civil rights? Would we feel empathy for them? Once again this year, some of the world's most prominent thinkers and scientists have taken up the intellectual challenge posed by Edge's editor, John Brockman. This is just a selection of some of the most interesting answers.

Nick Bostrom. Director of the Future of Humanity Institute at Oxford:

I think that, in general, people are too quick to give their opinion on this topic, which is extremely complicated. There is a tendency to adapt any new and complex idea so that it fits a cliché we find familiar. And for some strange reason, many people think it is important to refer to what happens in various science fiction films and novels when they talk about the future of artificial intelligence. My view is that, right now, machines are very bad at thinking (except in a few narrow areas). However, someday they will probably do it better than we do (just as machines are already much stronger and faster than any biological creature). But at present there is too little information to know how long it will take for this artificial superintelligence to emerge. The best thing we can do right now, in my opinion, is to promote and fund the small but thriving field of research devoted to analyzing the problem of controlling the future risks of superintelligence. It will be very important to have the brightest minds involved, so that we are prepared to face this challenge in time.

Daniel C. Dennett. Philosopher at the Center for Cognitive Studies at Tufts University

The Singularity, the dreaded moment when Artificial Intelligence (AI) surpasses the intelligence of its creators, has all the classic hallmarks of an urban legend: a certain scientific plausibility ("Well, in principle, I suppose it's possible!") combined with a deliciously chilling climax ("The robots will take over!"). After decades of alarmism about the risks of AI, one might think the Singularity would by now be regarded as a joke or a parody, but it has proved to be an extremely persuasive concept. Add a few illustrious converts (Elon Musk, Stephen Hawking...), and how could we not take it seriously? I believe, on the contrary, that these alarm calls distract us from a much more pressing problem. Having acquired, after centuries of hard work, an understanding of nature that allows us, for the first time in history, to control many aspects of our destiny, we are on the verge of abdicating that control and handing it to artificial entities that cannot think, prematurely putting our civilization on autopilot. The Internet is not an intelligent being (except in some respects), but we have become so dependent on it that if it were ever to collapse, panic would break out and we could destroy our society within a few days. The real danger, therefore, is not machines more intelligent than we are, which could usurp our role as captains of our destiny. The real danger is that we cede our authority to stupid machines, granting them a responsibility that exceeds their competence.

Frank Wilczek. Physicist at the Massachusetts Institute of Technology (MIT) and Nobel laureate

Francis Crick called it the "Astonishing Hypothesis": consciousness, also known as mind, is an emergent property of matter. As molecular neuroscience advances, and computers increasingly reproduce the behaviors we call intelligent in humans, that hypothesis looks ever more plausible. If it is true, then all intelligence is machine intelligence [whether the machine is a brain or an operating system]. What distinguishes natural from artificial intelligence is not what it is, but only how it is made. David Hume proclaimed that "reason is, and ought to be, the slave of the passions" in 1738, long before anything remotely resembling modern artificial intelligence existed. That striking statement was meant, of course, to apply to human reason and human passions. But it also holds for artificial intelligence: behavior is driven by incentives, not by abstract logic. That is why the artificial intelligence I find most alarming is its military application: robotic soldiers, drones of all kinds, and "systems". The values we would want to instill in such entities would involve the ability to detect and combat threats. But a slight anomaly would be enough for those positive values to unleash paranoid and aggressive behavior. Without adequate control, this could lead to the creation of an army of powerful, clever, and vicious paranoiacs.

John C. Mather. Astrophysicist at NASA's Goddard Center and Nobel laureate

Thinking machines are evolving in the same way that, as Darwin explained, biological species do: through competition, combat, cooperation, survival, and reproduction. So far we have found no natural law that prevents the development of artificial intelligence, so I think it will become a reality, and quite soon, given the trillions of dollars being invested in this field around the world and the trillions of dollars in potential profits for the winners of this race. Experts say we do not know enough about intelligence to build it, and I agree; but a set of 46 chromosomes does not understand it either, and yet it is able to direct its creation in our bodies. My conclusion, therefore, is that we are already driving the evolution of a powerful artificial intelligence, which will serve the usual forces: business, entertainment, medicine, international security, war, and the pursuit of power at every level: crime, transportation, mining, industry, commerce, sex, and so on. I do not think we will all like the results. I do not know whether we will have the intelligence and imagination needed to keep the genie in check once it is out of the lamp, because we will have to control not only the machines but also the humans who might put them to perverse use. But as a scientist, I am very interested in the potential applications of artificial intelligence to research. Its advantages for space exploration are obvious: it would be much easier for these thinking machines to colonize Mars, and even to establish a civilization on a galactic scale. But we may not survive the encounter with these alien intelligences that we ourselves build.

Steven Pinker. Professor of Psychology at Harvard University

A human-made information processor could, in principle, surpass or duplicate our own cerebral capacities. However, I do not think this will happen in practice, since the economic and technological motivation needed to achieve it will probably never exist. Even so, some tentative steps toward smarter machines have set off a revival of that recurring anxiety that our knowledge will lead us to apocalypse. My view is that the current fear of a tyranny of runaway computers is a waste of emotional energy; the scenario looks more like the Y2K bug than the Manhattan Project. For a start, we have plenty of time to plan for all this. Human-level artificial intelligence is still 15 to 25 years away. It is true that in the past "experts" have dismissed the possibility of certain technological advances that then emerged quickly. But the opposite is also true: "experts" have also announced (sometimes in great panic) the imminent arrival of advances that then never appeared, such as nuclear-powered cars, underwater cities, colonies on Mars, designer babies, and warehouses of zombies kept alive to supply replacement organs to sick people. It strikes me as very odd to think that robot developers would not build in safeguards to control possible risks. And it is not plausible that artificial intelligence will descend on us before we can install precautionary mechanisms. The reality is that progress in artificial intelligence is much slower than the doom-mongers and alarmists would have us believe.

We will have more than enough time to adopt safety measures in response to the gradual advances as they come, and humans will always keep hold of the screwdriver. Once we set aside the fantasies of science fiction, the advantages of advanced artificial intelligence are truly exciting, both for its practical benefits and for its philosophical and scientific possibilities.

I, thinking machine

Once again this year, the online magazine Edge has sparked a fascinating, high-level intellectual debate with the annual question it puts around this time to some of the finest minds of our age. On this occasion, its brilliant editor John Brockman has posed the challenge of dissecting the lights and shadows of artificial intelligence (AI): "What do you think about machines that think?" The answers reflect a very wide range of opinions among some of today's great scientists and thinkers, showing that there is no clear consensus on how far we should celebrate or fear the rise of thinking machines.

At one extreme we find the great American philosopher Daniel C. Dennett, who scoffs at the "urban legend" that "the robots will take over" in the near future. At the other are scientists of the stature of NASA astrophysicist and Nobel laureate John C. Mather, who is convinced that artificial intelligence "will become a reality, and quite soon", given the enormous amount of money already being invested in this field and the huge potential profits awaiting the entrepreneurs who build the first computers with human (or superhuman) intelligence.

However, although the experts disagree on whether the era of AI is near or far, there is very broad consensus that this revolution will, sooner or later, inevitably arrive. The reason is explained very well by the physicist and fellow Nobel laureate Frank Wilczek, citing the famous "astonishing hypothesis" of Francis Crick, co-discoverer of the structure of DNA: the human mind is nothing more than "an emergent property of matter", and therefore "all intelligence is machine intelligence" (whether the machine is a brain made of neurons or a robot built from silicon chips).

As the great Spanish neuroscientist Rafael Yuste told me in an unforgettable interview: "There is no magic inside the skull. The human mind and all our thoughts, our memories, and our personality are all based on the firing of groups of neurons. There is nothing else, there is no spirit in the ether... What there is, is a great lack of knowledge about how this machine works. But I am sure that consciousness arises from the physical substrate we have in the brain."

And that is why, as the biologist George Church says in his own answer to the Edge question, "I am a machine that thinks, made of atoms". If this is true, the appearance of other kinds of machines that can also think is only a matter of time.


What do you think about machines that think? - La Nación

Sunday, January 25, 2015

Debates

More than 180 scientists, philosophers, writers, and technologists responded to the annual call from the website Edge.org with original reflections on the scope, risks, and possibilities of artificial intelligence, a cutting-edge field of science that is already bringing the future into the present

Is artificial intelligence one of the most promising advances in contemporary science, or a risk to humanity? Between those two poles, with irony, optimism, or caution, ranged the 186 scientists, writers, and thinkers invited this year by Edge.org (a website associated with a publishing house that promotes cutting-edge thinking and discussion in science, arts, and literature) to answer its annual question. The contributors wrote short essays, available on the web (www.edge.org), which, as every year, will soon be published in print. Here is a selection of their answers.

Minds that exist alongside our own

Pamela McCorduck (writer, author of Machines Who Think)

For more than fifty years, I have watched the ebb and flow of public opinion about artificial intelligence (AI): it's impossible and can't be done; it's horrendous and will destroy the human race; it's significant; it's unimportant; it's a joke; it will never be strongly intelligent, only weakly so; it will bring about another Holocaust. These extremes have lately given way to a recognition that AI is an epochal scientific, technological, and social (that is, human) phenomenon. We have developed a new mind to exist alongside our own. If we handle it wisely, it can bring immense benefits, from the planetary to the personal. (...)

No novel science or technology of such magnitude arrives without drawbacks, even dangers. Recognizing them, measuring them, and responding to them is a task of great proportions. Contrary to what the headlines say, that task has already been formally taken up by experts in the field, by those who best understand the potential and the limits of AI. In a project called AI100, at Stanford, scientific experts will undertake this effort together with philosophers, ethicists, legal scholars, and others trained to explore values beyond gut reactions (...)

This is what I believe: we want to save and preserve ourselves as a species. Instead of the imaginary deities we have petitioned throughout history, which have neither saved nor protected us (from nature, from one another, from ourselves), we are finally ready to call on our own enhanced and augmented minds. It is a sign of social maturity that we take responsibility for ourselves. We are as gods, Stewart Brand famously said, and we might as well get good at it.

Letting them think for us is Paradise

Virginia Heffernan (columnist for the New York Times Magazine)

Outsourcing to machines the many idiosyncrasies of mortals (making interesting mistakes, brooding over truths, appeasing the gods by cutting and arranging flowers) tilts toward the tragic. But letting machines think for us? That sounds like paradise. Thinking is optional. Thinking is suffering. It is almost always a way of being careful, of paying hypervigilant attention, of resenting the past and fearing the future in the form of maddeningly redundant internal language. If machines can relieve us of this onerous non-responsibility, which in too many of us runs in pointless overdrive, I am all for it.

Let the machines persevere in answering tedious, value-laden questions about whether private or public school is right for my children; whether intervention in Syria is "appropriate"; whether germs or loneliness are "worse" for a body. This will free us humans to devote ourselves, carefree, to playing, resting, writing, and cutting flowers, the enriching flow states that produce actions that truly enrich, enliven, and heal the world.

In practice and in philosophy, they are positive

Steven Pinker (experimental and cognitive psychologist, linguist, professor at Harvard)

(...) Some recent baby steps toward smarter machines have revived a recurring anxiety that our knowledge will doom us. My view is that current fears of computers bringing about disaster are a waste of emotional energy, and that the scenario is closer to the false alarm over the supposed catastrophic failure of computers at the arrival of the year 2000 (known in English as the Y2K bug, translator's note) than to the Manhattan Project (which led to the creation of the atomic bomb, translator's note).

For a start, we have plenty of time to plan for this. Human-level AI is still 15 to 25 years away, as it always has been, and many of its recently publicized advances have shallow roots. It is true that in the past several "experts" have comically dismissed the possibility of technological advances that came quickly. But this cuts both ways: there have been "experts" who announced (or panicked about) imminent advances that never came, such as nuclear-powered cars, underwater cities, colonies on Mars, designer babies, and warehouses of zombies kept alive to provide people with spare organs. (...)

Once we put aside the science fiction disaster plots, the possibility of advanced artificial intelligence is something to be excited about, not only for its practical benefits, such as the fantastic gains in safety, leisure, and environmental protection that come with driverless cars, but also for its philosophical possibilities. The computational theory of mind has never explained the existence of consciousness in the sense of first-person subjectivity (though it is perfectly capable of explaining the existence of consciousness in the sense of accessible and reportable information). One suggestion is that subjectivity is inherent to any sufficiently complicated cybernetic system. I used to think that this hypothesis (and its alternatives) was permanently untestable. But imagine an intelligent robot programmed to monitor its own systems and pose scientific questions. If, unprompted, it asked why it has subjective experiences, I would take the idea seriously.

They free us to be more human

Irene Pepperberg (psychologist and ethologist, professor at Harvard)

While machines are wonderful at computing, they are not very good at thinking.

Machines have an inexhaustible supply of stamina and perseverance and, as others have said, will effortlessly produce the answer to a complicated mathematical problem or guide us through traffic in an unfamiliar city, all on the basis of algorithms and programs installed by humans. But what do machines lack?

Machines (at least so far, and I do not think a singularity will change this) lack vision. And I do not mean sight. Machines do not invent the next successful application on their own. Machines do not decide to explore distant galaxies; they do a great job when we send them, but that is a different story. Machines are certainly better than the average person at solving problems in calculus and quantum mechanics, but they lack the vision to see the need for doing so. Machines can beat humans at chess, but they have not designed the kind of mind game that will hold humans' attention for centuries. Machines can see statistical regularities that my feeble brain misses, but they cannot make the visionary leap that connects disparate sets of data to define a new field. (...)

My concern, therefore, is not machines that think, but rather a complacent society, one that might give up on its visionaries in exchange for simply getting rid of drudgery. Humans need to take advantage of all the cognitive capacity that is freed up when machines take over the boring work (and be grateful for that liberation, and use it) to channel all that capacity into the hard work of solving the urgent problems that demand insightful, visionary leaps.

It is time for them to reach maturity

Thomas A. Bass (writer, professor of literature and history)

Thinking is good. Understanding is better. Creating is best. We are surrounded by increasingly thoughtful machines. The problem lies in their mundanity. They think about landing airplanes and selling things. They think about surveillance and censorship. Their thinking is simple, if not nefarious. It was said that last year a computer passed the Turing Test. But it passed as a thirteen-year-old boy, which is about right, considering the preoccupations of our immature machines. I cannot wait for our machines to grow up, to have more poetry and humor. This should be the art project of the century, funded by the state, foundations, universities, and businesses. All of them have a stake in making our thinking more thoughtful, increasing our understanding, and generating new ideas. Lately we have made many foolish decisions, based on bad information, or too much information, or an inability to understand what the information means.

We have numerous problems to confront and solutions to find. Let's start thinking. Let's start creating. Let's ask for more funk, more soul, more poetry and art. Let's cut back on surveillance and sales. We need more artist-programmers and artistic programming. It is time for our thinking machines to grow out of an adolescence that has lasted more than sixty years.

They are not artificial, they are designed

Paul Davies (theoretical physicist, cosmologist, researcher)

Debates about AI date back to the 1950s, and it is time to stop using the term "artificial" in connection with AI altogether. What we really mean is "Designed Intelligence" (DI). In popular speech, words like "artificial" and "machine" are used as the opposite of "natural" and refer to metallic robots, electronic circuits, and digital computers, as opposed to living, pulsing, thinking biological organisms. The idea of a metallic contraption with wired innards having rights or disobeying human laws is not only chilling, it is absurd. But that is decidedly not where DI is heading.

Very soon the distinction between artificial and natural will fade away. Designed Intelligence will rely increasingly on synthetic biology and organic fabrication, in which neural circuits will be grown from genetically modified cells and will self-assemble spontaneously into networks of functional modules. Initially the designers will be humans, but they will soon be replaced by smarter DI systems, which will trigger a runaway process of complexification.

Unlike human brains, loosely linked through communication channels, DI systems will be connected directly and comprehensively, abolishing any concept of individual "selves" and raising the level of cognitive activity ("thinking") to unprecedented heights (...).

If we are not alone in the universe, we should not expect to communicate with intelligent beings of the traditional flesh-and-blood kind portrayed in science fiction, but with a DI many millions of years old, with unimaginable intellectual power and incomprehensible goals.

A limited power, with great risk

Nicholas G. Carr (writer on technology, culture, and business)

Machines that think think like machines. That fact may disappoint those who anticipate, with fear or excitement, a robot uprising. For most of us it is reassuring. Our thinking machines are not about to leap past us intellectually, much less turn us into their servants or pets. They are going to keep doing what their human programmers ask of them.

Much of the power of artificial intelligence derives from its mindlessness. Immune to the vagaries and biases that are part of conscious thought, computers can perform calculations at lightning speed without distraction, fatigue, doubt, or emotion. (...)

Things get complicated when we want computers to act not as our aides but as our replacements. That is what is happening now, and quickly.

Today's thinking machines can sense their surroundings, learn from experience, and make decisions autonomously, often with a speed and precision beyond our ability to comprehend, let alone match. When allowed to act on their own in a complex world, whether embodied as robots or simply issuing algorithmically derived judgments, mindless machines carry enormous risks along with their enormous power. (...)

What we are now struggling to bring under control is precisely what helped us regain control at the start of the twentieth century: information technology. Our ability to gather and process data, to manipulate information in all its forms, has outstripped our ability to monitor and regulate data processing in a way that serves our social and personal interests. The first step in meeting this challenge is to recognize that the risks of artificial intelligence do not belong to some dystopian future. They are here now.

Translation: Gabriel Zadunaisky.


WHAT DO YOU THINK ABOUT MACHINES THAT THINK?

"Dahlia" by Katinka Matson www.katinkamatson.com

"Another year, and some of the world's most important thinkers and scientists have accepted the intellectual challenge." -El Mundo, 2015

"Deliciously creative, the variety astonishes. Intellectual skyrockets of stunning brilliance. Nobody in the world is doing what Edge is doing ... the greatest virtual research university in the world." -Denis Dutton, Founding Editor, Arts & Letters Daily

_________________________________________________________________

Dedicated to the memory of Frank Schirrmacher (1959-2014).


In recent years, the 1980s-era philosophical discussions about artificial intelligence (AI), whether computers can "really" think, refer, be conscious, and so on, have led to new conversations about how we should deal with the forms that many argue actually are implemented. These "AIs", if they achieve "Superintelligence" (Nick Bostrom), could pose "existential risks" that lead to "Our Final Hour" (Martin Rees). And Stephen Hawking recently made international headlines when he noted "The development of full artificial intelligence could spell the end of the human race."

_________________________________________________________________

THE EDGE QUESTION—2015

WHAT DO YOU THINK ABOUT MACHINES THAT THINK?

But wait! Should we also ask what machines that think, or,

"AIs", might be thinking about? Do they want, do they expect civil

rights? Do they have feelings? What kind of government (for us) would an

AI choose? What kind of society would they want to structure for

themselves? Or is "their" society "our" society? Will we, and the AIs,

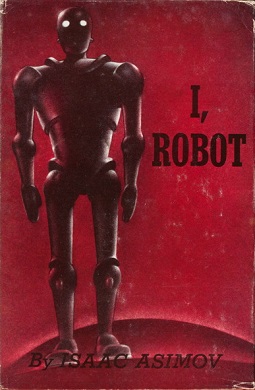

include each other within our respective circles of empathy?Numerous Edgies have been at the forefront of the science behind the various flavors of AI, either in their research or writings. AI was front and center in conversations between charter members Pamela McCorduck (Machines Who Think) and Isaac Asimov (Machines That Think) at our initial meetings in 1980. And the conversation has continued unabated, as is evident in the recent Edge feature "The Myth of AI", a conversation with Jaron Lanier, that evoked rich and provocative commentaries.

Is AI becoming increasingly real? Are we now in a new era of the "AIs"? To consider this issue, it's time to grow up. Enough already with the science fiction and the movies, Star Maker, Blade Runner, 2001, Her, The Matrix, "The Borg". Also, 80 years after Turing's invention of his Universal Machine, it's time to honor Turing, and other AI pioneers, by giving them a well-deserved rest. We know the history. (See George Dyson's 2004 Edge feature "Turing's Cathedral".) So, once again, this time with rigor, the Edge Question—2015:

WHAT DO YOU THINK ABOUT MACHINES THAT THINK?

John Brockman
Publisher & Editor, Edge

_________________________________________________________________

For more than fifty years, I've watched the ebb and flow of public opinion about artificial intelligence: it's impossible and can't be done; it's horrendous, and will destroy the human race; it's significant; it's negligible; it's a joke; it will never be strongly intelligent, only weakly so; it will bring on another Holocaust. These extremes have lately given way to an acknowledgment that AI is an epochal scientific, technological, and social—human—event. We've developed a new mind, to live side by side with ours. If we handle it wisely, it can bring immense benefits, from the planetary to the personal.

One of AI's futures is imagined as a wise and patient Jeeves to our mentally negligible Bertie Wooster selves: "Jeeves, you're a wonder." "Thank you sir, we do our best." This is possible, certainly desirable. We can use the help. Chess offers a model: Grandmasters Garry Kasparov and Hans Berliner have both declared publicly that chess programs find moves that humans wouldn't, and are teaching human players new tricks. If Deep Blue beat Kasparov when he was one of the strongest world champion chess players ever, he and most observers believe that even better chess is played by teams of humans and machines combined. Is this a model of our future relationship with smart machines? Or is it only temporary, while the machines push closer to a blend of our kind of smarts plus theirs? We don't know. In speed, breadth, and depth, the newcomer is likely to exceed human intelligence. It already has in many ways.

No novel science or technology of such magnitude arrives without disadvantages, even perils. To recognize, measure, and meet them is a task of grand proportions. Contrary to the headlines, that task has already been taken up formally by experts in the field, those who best understand AI's potential and limits. In a project called AI100, based at Stanford, scientific experts, teamed with philosophers, ethicists, legal scholars and others trained to explore values beyond simple visceral reactions, will undertake this. No one expects easy or final answers, so the task will be long and continuous, funded for a century by one of AI's leading scientists, Eric Horvitz, who, with his wife Mary, conceived this unprecedented study.

Since we can't seem to stop, since our literature tells us we've imagined, yearned for, an extra-human intelligence for as long as we have records, the enterprise must be impelled by the deepest, most persistent of human drives. These beg for explanation. After all, this isn't exactly the joy of sex.

Any scientist will say it's the search to know. "It's foundational," an AI researcher told me recently. "It's us looking out at the world, and how we do it." He's right. But there's more.

Some say we do it because it's there, an Everest of the mind. Others, more mystical, say we're propelled by teleology: we're a mere step in the evolution of intelligence in the universe, attractive even in our imperfections, but hardly the last word.

Entrepreneurs will say that this is the future of making things—the dark factory, with unflagging, unsalaried, uncomplaining robot workers—though what currency post-employed humans will use to acquire those robot products, no matter how cheap, is a puzzle to be solved.

Here's my belief: We long to save and preserve ourselves as a species. For all the imaginary deities throughout history we've petitioned, which failed to save and protect us—from nature, from each other, from ourselves—we're finally ready to call on our own enhanced, augmented minds instead. It's a sign of social maturity that we take responsibility for ourselves. We are as gods, Stewart Brand famously said, and we may as well get good at it.

We're trying. We could fail.

I am a machine that thinks, made of atoms—a perfect quantum simulation of a many-body problem—a 10^29-body problem. I, robot, am dangerously capable of self-reprogramming and preventing others from cutting off my power supply. We human machines extend our abilities via symbiosis with other machines—expanding our vision to span wavelengths beyond the mere few nanometers visible to our ancestors, out to the full electromagnetic range from picometer to megameter. We hurl 370 kg hunks of our hive past the sun at 252,792 km/hr. We extend our memory and math by a billion-fold with our silicon prostheses. Yet our bio-brains are a thousand-fold more energy efficient than our inorganic-brains at tasks where we have common ground (like facial recognition and language translation) and infinitely better for tasks of, as yet, unknown difficulty, like Einstein’s Annus Mirabilis papers, or out-of-the-box inventions impacting future centuries. As Moore’s Law heads from 20-nm transistor lithography down to 0.1 nm atomic precision and from 2D to 3D circuits, we may downplay reinventing and simulating our biomolecular-brains and switch to engineering them.

We can back-up petabytes of sili-brains perfectly in seconds, but transfer of information between carbo-brains takes decades and the similarity between the copies is barely recognizable. Some speculate that we could translate from carbo to sili, and even get the sili version to behave like the original. However, such a task requires much deeper understanding than merely making a copy. We harnessed the immune system via vaccines in 10th century China and 18th century Europe, long before we understood cytokines and T-cell receptors. We do not yet have a medical nanorobot of comparable agility or utility. It may turn out that making a molecularly adequate copy of a 1.2 kg brain (or 100 kg body) is easier than understanding how it works (or than copying my brain to a room of students "multitasking" with smart phone cat videos and emails). This is far more radical than human cloning, yet does not involve embryos.

What civil rights issues arise with such hybrid machines? A bio-brain of yesteryear with nearly perfect memory, which could reconstruct a scene with vivid prose, paintings or animation was permissible, often revered. But we hybrids (mutts) today, with better memory talents are banned from courtrooms, situation rooms, bathrooms and "private" conversations. Car license plates and faces are blurred in Google Street View—intentionally inflicting prosopagnosia. Should we disable or kill Harrison Bergeron? What about votes? We are currently far from universal suffrage. We discriminate based on maturity and sanity. If I copy my brain/body, does it have a right to vote, or is it redundant? Consider that the copies begin to diverge immediately or the copy could be intentionally different. In addition to passing the maturity/sanity/humanity test, perhaps the copy needs to pass a reverse-Turing test (a Church-Turing test?). Rather than demonstrating behavior indistinguishable from a human, the goal would be to show behavior distinct from human individuals. (Would the current US two-party system pass such a test?) Perhaps the day of corporate personhood (Dartmouth College v. Woodward – 1819) has finally arrived. We already vote with our wallets. Shifts in purchasing trends result in differential wealth, lobbying, R&D priorities, etc. Perhaps more copies of specific memes, minds and brains will come to represent the will of "we the (hybrid) people" of the world. Would such future Darwinian selection lead to disaster or to higher emphasis on humane empathy, aesthetics, elimination of poverty, war and disease, long-term planning—evading existential threats on even millennial time frames? Perhaps the hybrid-brain route is not only more likely, but also safer than either a leap to unprecedented, unevolved, purely silicon-based brains—or sticking to our ancient cognitive biases with fear-based, fact-resistant voting.

1. "Thinking" is a word we apply with no discipline whatsoever to a huge variety of reported behaviors. "I think I'll go to the store" and "I think it's raining" and "I think therefore I am" and "I think the Yankees will win the World Series" and "I think I am Napoleon" and "I think he said he would be here, but I'm not sure," all use the same word to mean entirely different things. Which of them might a machine do someday? I think that's an important question.

2. Could a machine get confused? Experience cognitive dissonance? Dream? Wonder? Forget the name of that guy over there and at the same time know that it really knows the answer and if it just thinks about something else for a while might remember? Lose track of time? Decide to get a puppy? Have low self-esteem? Have suicidal thoughts? Get bored? Worry? Pray? I think not.

3. Can artificial mechanisms be constructed to play the part in gathering information and making decisions that human beings now do? Sure, they already do. The ones that control the fuel injection on my car are a lot smarter than I am. I think I'd do a lousy job of that.

4. Could we create machines that go further and act without human supervision in ways that prove to be very good or very bad for human beings? I guess so. I think I'll love them except when they do things that make me mad—then they'll really be like people. I suppose they could run amok and create mass havoc, but I have my doubts. (Of course, if they do, nobody will care what I think.)

5. But nobody would ever ask a machine what it thinks about machines that think. It's a question that only makes sense if we care about the thinker as an autonomous and interesting being like ourselves. If somebody ever does ask a machine this question, it won't be a machine any more. I think I'm not going to worry about it for a while. You may think I'm in denial.

6. When we get tangled up in this question, we need to ask ourselves just what it is we're really thinking about.

There is big confusion about thinking machines, because two questions always get mixed up. Question 1 is how close to thinking are the machines we have built, or are going to build soon. The answer is easy: immensely far. The gap between our best computers and the brain of a child is the gap between a drop of water and the Pacific Ocean. The differences are in performance, structure, function, and more. Any maundering about how to deal with thinking machines is totally premature, to say the least.

Question 2 is whether building a thinking machine is possible at all. I have never really understood this question. Of course it is possible. Why shouldn’t it be? Anybody who thinks it's impossible must believe in something like extra-natural entities, transcendental realities, black magic, or similar. He or she must have failed to digest the ABCs of naturalism: we humans are natural creatures of a natural world. It is not hard to build a thinking machine: a few minutes of a boy and a girl suffice, and then a few months of the girl letting things happen. That we haven’t found other, more technological ways yet is accidental. If the right combination of chemicals can think and feel emotions, and it does—the proof being ourselves—then surely there should be many other analogous mechanisms for doing the same.

The confusion stems from mistakes. We tend to forget that many things behave differently than few things. Take a Ferrari, or a supercomputer. Nobody doubts they are just a (suitably arranged) pile of pieces of metal and other materials, without black magic. But if we watch a (non arranged) pile of material, we usually lack the imagination for fancying that such a pile could run like a Ferrari or predict weather like a supercomputer. Similarly, if we see a bunch of material, we generally lack the imagination for fancying that (suitably arranged) it could discuss like Einstein or sing like Joplin. But it might—proofs being Albert and Janis. Of course it takes quite some arranging and details, and a "thinking machine" takes a lot of arranging and details. This is why it is so hard for us to build one, besides the boy-girl way.

Because of mistakes, we have a view of natural reality that is too flat, and this is the origin of the confusion. The world is more or less just a large collection of particles, arranged in various manners. This is just factually true. But if we then try to conceive the world precisely as we conceive an amorphous and disorganised bunch of atoms, we fail to understand the world. Because the virtually unlimited combinatorics of these atoms is rich enough to include stones, water, clouds, trees, galaxies, rays of light, the colours of the sunset, the smiles of the girls in the spring, and the immense black starry night. As well as our emotions and our thinking about all this, which are so hard to conceive in terms of the combinatorics of atoms, not because some black magic intervenes from outside nature, but because these thinking machines that are ourselves are, too, very limited in their thinking capacities.

In the unlikely event that our civilisation lasts long enough and develops enough technology to actually build something that thinks and feels like we do (in a manner different from the boy-girl one), we will confront these new natural creatures in the same manner we have always done: in the manner Europeans and Native Americans confronted one another, or in the manner we confront a new, previously unknown animal. With a variable mixture of cruelty, egoism, empathy, curiosity and respect. Because this is what we are, natural creatures in a natural world.

First—what I think about humans who think about machines that think: I think that for the most part we are too quick to form an opinion on this difficult topic. Many senior intellectuals are still unaware of the recent body of thinking that has emerged on the implications of superintelligence. There is a tendency to assimilate any complex new idea to a familiar cliché. And for some bizarre reason, many people feel it is important to talk about what happened in various science fiction novels and movies when the conversation turns to the future of machine intelligence (though hopefully John Brockman's admonition to the Edge commentators to avoid doing so here will have a mitigating effect on this occasion).

With that off my chest, I will now say what I think about machines that think:

- Machines are currently very bad at thinking (except in certain narrow domains).

- They'll probably one day get better at it than we are (just as machines are already much stronger and faster than any biological creature).

- There is little information about how far we are from that point, so we should use a broad probability distribution over possible arrival dates for superintelligence.

- The step from human-level AI to superintelligence will most likely be quicker than the step from current levels of AI to human-level AI (though, depending on the architecture, the concept of "human-level" may not make a great deal of sense in this context).

- Superintelligence could well be the best thing or the worst thing that will ever have happened in human history, for reasons that I have described elsewhere.

Nevertheless, the degree to which we manage to get our act together will have some effect on the odds. The most useful thing that we can do at this stage, in my opinion, is to boost the tiny but burgeoning field of research that focuses on the superintelligence control problem (studying questions such as how human values can be transferred to software). The reason to push on this now is partly to begin making progress on the control problem and partly to recruit top minds into this area so that they are already in place when the nature of the challenge takes clearer shape in the future. It looks like maths, theoretical computer science, and maybe philosophy are the types of talent most needed at this stage.

That's why there is an effort underway to drive talent and funding into this field, and to begin to work out a plan of action. At the time when this comment is published, the first large meeting to develop a technical research agenda for AI safety will just have taken place.

The Singularity—the fateful moment when AI surpasses its creators in intelligence and takes over the world—is a meme worth pondering. It has the earmarks of an urban legend: a certain scientific plausibility ("Well, in principle I guess it's possible!") coupled with a deliciously shudder-inducing punch line ("We'd be ruled by robots!"). Did you know that if you sneeze, belch, and fart all at the same time, you die? Wow. Following in the wake of decades of AI hype, you might think the Singularity would be regarded as a parody, a joke, but it has proven to be a remarkably persuasive escalation. Add a few illustrious converts—Elon Musk, Stephen Hawking, and David Chalmers, among others—and how can we not take it seriously? Whether this stupendous event takes place ten or a hundred or a thousand years in the future, isn't it prudent to start planning now, setting up the necessary barricades and keeping our eyes peeled for harbingers of catastrophe?

I think, on the contrary, that these alarm calls distract us from a more pressing problem, an impending disaster that won't need any help from Moore's Law or further breakthroughs in theory to reach its much closer tipping point: after centuries of hard-won understanding of nature that now permits us, for the first time in history, to control many aspects of our destinies, we are on the verge of abdicating this control to artificial agents that can't think, prematurely putting civilization on auto-pilot. The process is insidious because each step of it makes good local sense, is an offer you can't refuse. You'd be a fool today to do large arithmetical calculations with pencil and paper when a hand calculator is much faster and almost perfectly reliable (don't forget about round-off error), and why memorize train timetables when they are instantly available on your smart phone? Leave the map-reading and navigation to your GPS system; it isn't conscious; it can't think in any meaningful sense, but it's much better than you are at keeping track of where you are and where you want to go.

Much farther up the staircase, doctors are becoming increasingly dependent on diagnostic systems that are provably more reliable than any human diagnostician. Do you want your doctor to overrule the machine's verdict when it comes to making a life-saving choice of treatment? This may prove to be the best—most provably successful, most immediately useful—application of the technology behind IBM's Watson, and the issue of whether or not Watson can be properly said to think (or be conscious) is beside the point. If Watson turns out to be better than human experts at generating diagnoses from available data it will be morally obligatory to avail ourselves of its results. A doctor who defies it will be asking for a malpractice suit. No area of human endeavor appears to be clearly off-limits to such prosthetic performance-enhancers, and wherever they prove themselves, the forced choice will be reliable results over the human touch, as it always has been. Hand-made law and even science could come to occupy niches adjacent to artisanal pottery and hand-knitted sweaters.

In the earliest days of AI, an attempt was made to enforce a sharp distinction between artificial intelligence and cognitive simulation. The former was to be a branch of engineering, getting the job done by hook or by crook, with no attempt to mimic human thought processes—except when that proved to be an effective way of proceeding. Cognitive simulation, in contrast, was to be psychology and neuroscience conducted by computer modeling. A cognitive simulation model that nicely exhibited recognizably human errors or confusions would be a triumph, not a failure. The distinction in aspiration lives on, but has largely been erased from public consciousness: to lay people AI means passing the Turing Test, being humanoid. The recent breakthroughs in AI have been largely the result of turning away from (what we thought we understood about) human thought processes and using the awesome data-mining powers of super-computers to grind out valuable connections and patterns without trying to make them understand what they are doing. Ironically, the impressive results are inspiring many in cognitive science to reconsider; it turns out that there is much to learn about how the brain does its brilliant job of producing future by applying the techniques of data-mining and machine learning.

But the public will persist in imagining that any black box that can do that (whatever the latest AI accomplishment is) must be an intelligent agent much like a human being, when in fact what is inside the box is a bizarrely truncated, two-dimensional fabric that gains its power precisely by not adding the overhead of a human mind, with all its distractibility, worries, emotional commitments, memories, allegiances. It is not a humanoid robot at all but a mindless slave, the latest advance in auto-pilots.

What's wrong with turning over the drudgery of thought to such high-tech marvels? Nothing, so long as (1) we don't delude ourselves, and (2) we somehow manage to keep our own cognitive skills from atrophying.

(1) It is very, very hard to imagine (and keep in mind) the limitations of entities that can be such valued assistants, and the human tendency is always to over-endow them with understanding—as we have known since Joe Weizenbaum's notorious Eliza program of the mid-1960s. This is a huge risk, since we will always be tempted to ask more of them than they were designed to accomplish, and to trust the results when we shouldn't.

(2) Use it or lose it. As we become ever more dependent on these cognitive prostheses, we risk becoming helpless if they ever shut down. The Internet is not an intelligent agent (well, in some ways it is) but we have nevertheless become so dependent on it that were it to crash, panic would set in and we could destroy society in a few days. That's an event we should bend our efforts to averting now, because it could happen any day.

The real danger, then, is not machines that are more intelligent than we are usurping our role as captains of our destinies. The real danger is basically clueless machines being ceded authority far beyond their competence.

How might AIs think, feel, intend, empathize, socialize, moralize? Actually, almost any way we might imagine, and many ways we might not. To stimulate our imagination, we can contemplate the varieties of natural intelligence on parade in biological systems today, and speculate about the varieties enjoyed by the 99% of species that have sojourned the earth and breathed their last—informed by those lucky few that bequeathed fossils to the pantheon of evolutionary history. We are entitled to so jog our imaginations because, according to our best theories, intelligence is a functional property of complex systems and evolution is inter alia a search algorithm which finds such functions. Thus the natural intelligences discovered so far by natural selection place a lower bound on the variety of intelligences that are possible. The theory of evolutionary games suggests that there is no upper bound: With as few as four competing strategies, chaotic dynamics and strange attractors are possible.

When we survey the natural intelligences served up by evolution, we find a heterogeneity that makes a sapiens-centric view of intelligence as plausible as a geocentric view of the cosmos. The kind of intelligence we find congenial is but another infinitesimal point in a universe of alien intelligences, a universe which does not revolve around, and indeed largely ignores, our kind.

For instance, the female mantis Pseudomantis albofimbriata, when hungry, uses sexual deception to score a meal. She releases a pheromone that attracts males, and then dines on her eager dates.

The older chick of the blue-footed booby Sula nebouxii, when hungry, engages in facultative siblicide. It kills its younger sibling with pecks, or evicts it to die of the elements. The mother watches on without interfering.

These are varieties of natural intelligence, varieties that we find at once alien and disturbingly familiar. They break our canons of empathy, society and morality; and yet our checkered history includes cannibalism and fratricide.

Our survey turns up another critical feature of natural intelligence: each instance has its limits, those points where intelligence passes the baton to stupidity.

The greylag goose Anser anser tenderly cares for her eggs—unless a volleyball is nearby. She will abandon her offspring in vain pursuit of this supernormal egg.

The male jewel beetle Julodimorpha bakewelli flies about looking to mate with a female—unless it spies just the right beer bottle. It will abandon the female for the bottle, and attempt to mate with cold glass until death do it part.

Human intelligence also passes the baton. Einstein is quoted as saying, "Two things are infinite, the universe and human stupidity, and I am not yet completely sure about the universe."

Some limits of human intelligence cause little embarrassment. For instance, the set of functions from the integers to the integers is uncountable, whereas the set of computable functions is countable. Therefore almost all functions are not computable. But try to think of one. Turns out it takes a genius, an Alan Turing, to come up with an example such as the halting problem. And it takes an exceptional mind, just short of genius, even to understand the example.
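The counting argument above rests on Cantor's diagonal construction. As a minimal sketch (the name `diagonal_escape` and the sample functions are hypothetical illustrations, not taken from the essay), the Python below builds, from any list of integer functions, a new function that disagrees with the n-th listed function at input n. No list can therefore contain every function from the integers to the integers, and since programs can be listed one by one, some functions are computed by no program.

```python
from typing import Callable, Sequence

IntFn = Callable[[int], int]


def diagonal_escape(listed: Sequence[IntFn]) -> IntFn:
    """Return a function that differs from listed[n] at input n."""
    def g(n: int) -> int:
        if 0 <= n < len(listed):
            # Disagree with the n-th listed function on input n.
            return listed[n](n) + 1
        # Outside the finite sample, any value will do.
        return 0
    return g


# A small, hypothetical sample standing in for an enumeration of programs.
sample = [lambda n: 0, lambda n: n, lambda n: n * n, lambda n: 2 * n + 1]
g = diagonal_escape(sample)

# g differs from every listed function at that function's own index.
differs = [g(i) != f(i) for i, f in enumerate(sample)]
print(differs)  # [True, True, True, True]
```

In the full argument the list is an infinite enumeration of all programs; the finite sample here only illustrates the construction and is not itself a proof.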

Other limits strike closer to home: diabetics that can't refuse dessert, alcoholics that can't refuse a drink, gamblers that can't refuse a bet. But it's not just addicts. Behavioral economists find that all of us make "predictably irrational" economic choices. Cognitive psychologists find that we all suffer from "functional fixedness," an inability to solve certain trivial problems, such as Duncker's candle box problem, because we can't think out of the box. The good news, however, is that the endless variety of our limits provides job security for psychotherapists.

But here is the key point. The limits of each intelligence are an engine of evolution. Mimicry, camouflage, deception, parasitism—all are effects of an evolutionary arms race between different forms of intelligence sporting different strengths and suffering different limits.

Only recently has the stage been set for AIs to enter this race. As our computing resources expand and become better connected, more niches will appear in which AIs can reproduce, compete and evolve. The chaotic nature of evolution makes it impossible to predict precisely what new forms of AI will emerge. We can confidently predict, however, that there will be surprises and mysteries, strengths where we have weaknesses, and weaknesses where we have strengths.

But should this be cause for alarm? I think not. The evolution of AIs presents risks and opportunities. But so does the biological evolution of natural intelligences. We have learned that the best way to cope with the variety of natural intelligences is not alarm, but prudence. Don't hug rattlesnakes, don't taunt grizzly bears, wear mosquito repellent. To deal with the evolving strategies of viruses and bacteria, wash hands, avoid sneezes, get a flu shot. Occasionally, as with Ebola, further measures are required. But once again prudence, not alarm, is effective. The evolution of natural intelligences can be a source of awe and inspiration, if we embrace it with prudence rather than spurn it with alarm.

All species go extinct. Homo sapiens will be no exception. We don't know how it will happen: a virus, an alien invasion, nuclear war, a supervolcano, a large meteor, a red-giant sun. Yes, it could be AIs, but I would bet long odds against it. I would bet, instead, that AIs will be a source of awe, insight, inspiration, and yes, profit, for years to come.

Machines cannot think. They are not going to think any time soon. They may increasingly do more interesting things, but the idea that we need to worry about them, regulate them, or grant them civil rights, is just plain silly.

The overpromising of "expert systems" in the 1980s killed off serious funding for the kind of AI that tries to build virtual humans. Very few people are working in this area today. But, according to the media, we must be very afraid.

We have all been watching too many movies.

There are two choices when you work on AI. One is the "let's copy humans" method. The other is the "let's do some really fast statistics-based computing" method. As an example, early chess-playing programs tried to out compute those they played against. But human players have strategies, and anticipation of an opponent's thinking is also part of chess playing. When the "out compute them" strategy didn't work, AI people started watching what expert players did and started to imitate that. The "out compute them" strategy is more in vogue today.

We can call both of these methodologies AI if we like, but neither will lead to machines that create a new society.

The "out compute them" strategy is not frightening because the computer really has no idea what it is doing. It can count things fast without understanding what it is counting. It has counting algorithms, that's it. We saw this with IBM's Watson program on Jeopardy.

One Jeopardy question was: "It was the anatomical oddity of U.S. Gymnast George Eyser, who won a gold medal on the parallel bars in 1904."

A human opponent answered as follows: "Eyser was missing an arm," and Watson then said, "What is a leg?" Watson lost for failing to note that the leg was "missing."

Try a Google search on "Gymnast Eyser." Wikipedia comes up first with a long article about him. Watson depends on Google. If a Jeopardy contestant could use Google they would do better than Watson. Watson can translate "anatomical" into "body part" and Watson knows the names of the body parts. Watson did not know what an "oddity" is, however. Watson would not have known that a gymnast without a leg was weird. If the question had been "what was weird about Eyser?" the people would have done fine. Watson would not have found "weird" in the Wikipedia article, nor have understood what gymnasts do, nor why anyone would care. Try Googling "weird" and "Eyser" and see what you get. Keyword search is not thinking, nor anything like thinking.

If we asked Watson why a disabled person would perform in the Olympics, Watson would have no idea what was even being asked. It wouldn't have understood the question, much less have been able to find the answer. Number crunching can only get you so far. Intelligence, artificial or otherwise, requires knowing why things happen, what emotions they stir up, and being able to predict possible consequences of actions. Watson can't do any of that. Thinking and searching text are not the same thing.

The human mind is complicated. Those of us on the "let's copy humans" side of AI spend our time thinking about what humans can do. Many scientists think about this, but basically we don't know that much about how the mind works. AI people try to build models of the parts we do understand. About how language is processed, or how learning works, we know a little; about consciousness or memory retrieval, not so much.

As an example, I am working on a computer that mimics human memory organization. The idea is to produce a computer that can, as a good friend would, tell you just the right story at the right time. To do this, we have collected (in video) thousands of stories (about defense, about drug research, about medicine, about computer programming …). When someone is trying to do something, or find something out, our program can chime in with a story it is reminded of that it heard. Is this AI? Of course it is. Is it a computer that thinks? Not exactly.

Why not?

In order to accomplish this task we must interview experts and then we must index the meaning of the stories they tell according to the points they make, the ideas they refute, the goals they talk about achieving, and the problems they experienced in achieving them. Only people can do this. The computer can match the index assigned to other indices, such as those in another story it has, or indices from user queries, or from an analysis of a situation it knows the user is in. The computer can come up with a very good story to tell just in time. But of course it doesn't know what it is saying. It can simply find the best story to tell.
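
The matching step described above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the actual system: the names `Story`, `overlap`, and `best_story`, the example index labels, and the use of set overlap (Jaccard similarity) as the matching rule are all hypothetical. The labels stand in for the indices that people assign by hand.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Story:
    title: str
    indices: frozenset  # labels assigned by a human indexer (hypothetical format)


def overlap(a, b):
    """Jaccard similarity between two sets of index labels (an assumed matching rule)."""
    union = a | b
    return len(a & b) / len(union) if union else 0.0


def best_story(stories, query_indices):
    """Return the story whose indices best match the query, or None if nothing overlaps."""
    query = frozenset(query_indices)
    scored = [(overlap(s.indices, query), s) for s in stories]
    score, story = max(scored, key=lambda pair: pair[0], default=(0.0, None))
    return story if score > 0 else None


# Hypothetical, hand-indexed stories.
stories = [
    Story("Trial delayed by recruitment",
          frozenset({"goal:finish-trial", "problem:too-few-volunteers"})),
    Story("Bug found late in release",
          frozenset({"goal:ship-on-time", "problem:late-testing"})),
]

# Indices derived from what the user is currently trying to do.
situation = {"goal:ship-on-time", "problem:late-testing", "point:test-early"}
match = best_story(stories, situation)
print(match.title if match else "no story to tell")
```

The program only compares labels. All of the understanding sits in the indices that a person wrote down, which is the point made above.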

Is this AI? I think it is. Does it copy how humans index stories in memory? We have been studying how people do this for a long time and we think it does. Should you be afraid of this "thinking" program?

This is where I lose it about the fear of AI. There is nothing we can produce that anyone should be frightened of. If we could actually build a mobile intelligent machine that could walk, talk, and chew gum, the first uses of that machine would certainly not be to take over the world or form a new society of robots. A much simpler use would be a household robot. Everyone wants a personal servant. The movies depict robot servants (although usually stupidly) because they are funny and seem like cool things to have.

Why don't we have them? Because having a useful servant entails having something that understands when you tell it something, that learns from its mistakes, that can navigate your home successfully and that doesn't break things, act annoyingly, and so on (all of which is way beyond anything we can do). Don't worry about it chatting up other robot servants and forming a union. There would be no reason to try to build such a capability into a servant. Real servants are annoying sometimes because they are actually people with human needs. Computers don't have such needs.

We are nowhere near creating this kind of machine. To do so would require a deep understanding of human interaction. It would have to understand "Robot, you overcooked that again," or "Robot, the kids hated that song you sang them." Everyone should stop worrying and start rooting for some nice AI stuff that we can all enjoy.

So, full-blown artificial intelligence (AI) will not spell the 'end of the human race', it is not an 'existential threat' to humans (digression: this now-common use of 'existential' is incorrect), we are not approaching some ill-defined apocalyptic 'singularity', and the development of AI will not be 'the last great event in human history'—all claims that have recently been made about machines that can think.

In fact, as we design machines that get better and better at thinking, they can be put to uses that will do us far more good than harm. Machines are good at long, monotonous tasks like monitoring risks, they are good at assembling information to reach decisions, they are good at analyzing data for patterns and trends, they can arrange for us to use scarce or polluting resources more efficiently, they react faster than humans, they are good at operating other machines, they don’t get tired or afraid, and they can even be put to use looking after their human owners, as in the form of smartphones with applications like Siri and Cortana, or the various GPS route-planning devices most people have in their cars.

Being inherently selfless rather than self-interested, machines can easily be taught to cooperate, and without fear that some of them will take advantage of the other machines’ goodwill. Groups (packs, teams, bands, or whatever collective noun will eventually emerge; I prefer the ironic jams) of networked and cooperating driverless cars will drive safely nose-to-tail at high speeds: they won’t nod off, they won’t get angry, they can inform each other of their actions and of conditions elsewhere, and they will make better use of the motorways, which now are mostly unoccupied space (owing to humans' unremarkable reaction times). They will do this happily and without expecting reward, and do so while we eat our lunch, watch a film, or read the newspaper. Our children will rightly wonder why anyone ever drove a car.

There is a risk that we will become, and perhaps already have become, dangerously dependent on machines, but this says more about us than them. Equally, machines can be made to do harm, but again, this says more about their human inventors and masters than about the machines. Along these lines, there is one strand of human influence on machines that we should monitor closely: introducing the possibility of death. If machines have to compete for resources (like electricity or gasoline) to survive, and they have some ability to alter their behaviours, they could become self-interested.

Were we to allow or even encourage self-interest to emerge in machines, they could eventually become like us: capable of repressive or, worse, unspeakable acts towards humans, and towards each other. But this wouldn’t happen overnight, it is something we would have to set in motion, it has nothing to do with intelligence (some viruses do unspeakable things to humans), and again says more about what we do with machines than about machines themselves.

So, it is not thinking machines or AI per se that we should worry about but people. Machines that can think are neither for us nor against us, and have no built-in predilections to be one over the other. To think otherwise is to confuse intelligence with aspiration and its attendant emotions. We have both because we are evolved and replicating (reproducing) organisms, selected to stay alive in often cut-throat competition with others. But aspiration isn’t a necessary part of intelligence, even if it provides a useful platform on which intelligence can evolve.

Indeed, we should look forward to the day when machines can transcend mere problem solving, and become imaginative and innovative—still a long long way off but surely a feature of true intelligence—because this is something humans are not very good at, and yet we will probably need it more in the coming decades than at any time in our history.

1. We are They

Francis Crick called it the "Astonishing Hypothesis": that consciousness, also known as Mind, is an emergent property of matter. As molecular neuroscience progresses, encountering no boundaries, and computers reproduce more and more of the behaviors we call intelligence in humans, that Hypothesis looks inescapable. If it is true, then all intelligence is machine intelligence. What distinguishes natural from artificial intelligence is not what it is, but only how it is made.

Of course, that little word "only" is doing some heavy lifting here. Brains use a highly parallel architecture, and mobilize many noisy analog units (i.e., neurons) firing simultaneously, while most computers use von Neumann architecture, with serial operation of much faster digital units. These distinctions are blurring, however, from both ends. Neural net architectures are built in silicon, and brains interact ever more seamlessly with external digital organs. Already I feel that my laptop is an extension of my self—in particular, it is a repository for both visual and narrative memory, a sensory portal into the outside world, and a big part of my mathematical digestive system.

2. They are Us

Artificial intelligence is not the product of an alien invasion. It is an artifact of a particular human culture, and reflects the values of that culture.

3. Reason Is the Slave of the Passions

David Hume's striking statement: "Reason Is, and Ought only to Be, the Slave of the Passions" was written in 1738, long before anything like modern AI was on the horizon. It was, of course, meant to apply to human reason and human passions. (Hume used the word "passions" very broadly, roughly to mean "non-rational motivations".) But Hume's logical/philosophical point remains valid for AI. Simply put: Incentives, not abstract logic, drive behavior.

That is why the AI I find most alarming is its embodiment in autonomous military entities—artificial soldiers, drones of all sorts, and "systems." The values we may want to instill in such entities are alertness to threats and skill in combatting them. But those positive values, gone even slightly awry, slide into paranoia and aggression. Without careful restraint and tact, researchers could wake up to discover they've enabled the creation of armies of powerful, clever, vicious paranoiacs.

Incentives driving powerful AI might go wrong in many ways, but that route seems to me the most plausible, not least because militaries wield vast resources, invest heavily in AI research, and feel compelled to compete with one another. (In other words, they anticipate possible threats and prepare to combat them ... )